A brief history of open data

From eight simple principles to today's vast ecosystem, here's what's happening -- and how to take full advantage.

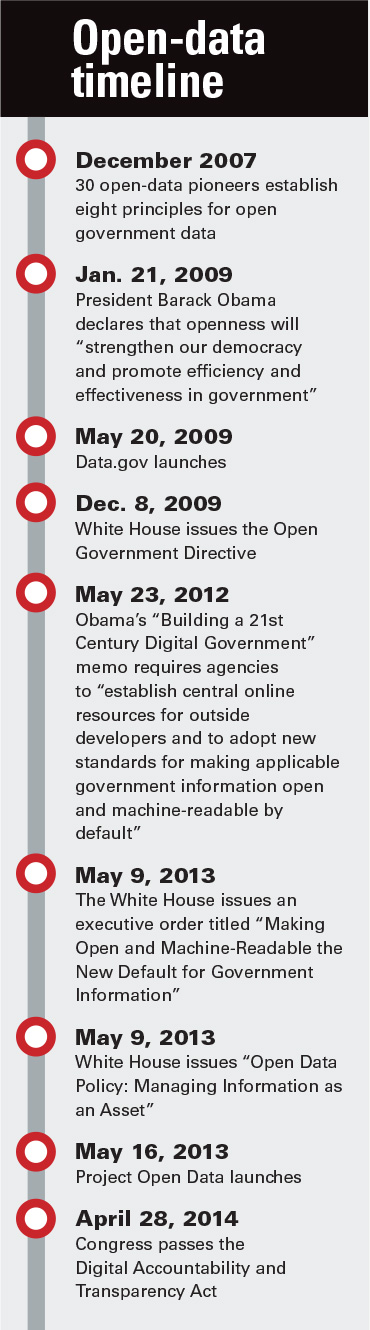

In December 2007, 30 open-data pioneers gathered in Sebastopol, Calif., and penned a set of eight open-government data principles that inaugurated a new era of democratic innovation and economic opportunity.

"The objective…was to find a simple way to express values that a bunch of us think are pretty common, and these are values about how the government could make its data available in a way that enables a wider range of people to help make the government function better," Harvard Law School Professor Larry Lessig said. "That means more transparency in what the government is doing and more opportunity for people to leverage government data to produce insights or other great business models."

The eight simple principles -- that data should be complete, primary, timely, accessible, machine-processable, nondiscriminatory, nonproprietary and license-free -- still serve as the foundation for what has become a burgeoning open-data movement.

In the seven years since those principles were released, governments around the world have adopted open-data initiatives and launched platforms that empower researchers, journalists and entrepreneurs to mine this new raw material and its potential to uncover new discoveries and opportunities. Open data has drawn civic hacker enthusiasts around the world, fueling hackathons, challenges, apps contests, barcamps and "datapaloozas" focused on issues as varied as health, energy, finance, transportation and municipal innovation.

In the United States, the federal government initiated the beginnings of a wide-scale open-data agenda on President Barack Obama's first day in office in January 2009, when he issued his memorandum on transparency and open government, which declared that "openness will strengthen our democracy and promote efficiency and effectiveness in government." The president gave federal agencies three months to provide input into an open-government directive that would eventually outline what each agency planned to do with respect to civic transparency, collaboration and participation, including specific objectives related to releasing data to the public.

In May of that year, Data.gov launched with just 47 datasets and a vision to "increase public access to high-value, machine-readable datasets generated by the executive branch of the federal government."

When the White House issued the final draft of its federal Open Government Directive later that year, the U.S. open-government data movement got its first tangible marching orders, including a 45-day deadline to open previously unreleased data to the public.

Now five years after its launch, Data.gov boasts more than 100,000 datasets from 227 local, state and federal agencies and organizations.

"In May 2009, Data.gov was an experiment," Data.gov Evangelist Jeanne Holm wrote last month to mark the anniversary. "There were questions: Would people use the data? Would agencies share the data? And would it make a difference? We've all come a long way to answering those questions."

The Obama administration continues to iterate and deepen its open-data efforts, most recently via a May 2013 executive order titled "Making Open and Machine-Readable the New Default for Government Information" and a supplementary Office of Management and Budget memo under the subject line of "Open Data Policy: Managing Information as an Asset," which created a framework to "help institutionalize the principles of effective information management at each stage of the information's life cycle to promote interoperability and openness."

The directive also established the creation of Project Open Data, managed jointly by OMB and the Office of Science and Technology Policy. The centralized portal seeks to foster "a culture change in government where we embrace collaboration and where anyone can help us make open data work better," U.S. Chief Technology Officer Todd Park and CIO Steven VanRoekel wrote in May 2013 to announce the effort.

Fully hosted on the social coding platform GitHub, Project Open Data offers tools, resources and case studies that can be used and enhanced by the community. Resources include an implementation guide, data catalog requirements, guidance for making a business case for open data, common core metadata schema, a sample chief data officer position description and more.

Staying true to the spirit of openness

As governments begin to implement open-data policies, following a flexible, iterative methodology is essential to the true spirit of openness. Through this process, Open Knowledge -- an international nonprofit organization devoted to "using advocacy, technology and training to unlock information" -- recommends keeping it simple by releasing "easy" data that will be a "catalyst for larger behavioral change within organizations," engaging early and actively, and immediately addressing any fear or misunderstanding that might arise during the process.

Open Knowledge suggests four "simple" steps in the open-data implementation process:

- Choose your dataset(s).

- Apply an open license.

- Make the data available.

- Make it discoverable.

From a more granular agency perspective, Project Open Data offers the following protocol:

- Create and maintain an enterprise data inventory.

- Create and maintain a public data listing.

- Create a process to engage with customers to help facilitate and prioritize data release.

- Document if data cannot be released.

- Clarify roles and responsibilities for promoting efficient and effective data release.

Furthermore, the technical fundamentals of open data include using machine-readable, open file formats such as XML, HTML, JSON, RDF or plain text -- as opposed to the pervasive, proprietary Portable Document Format created by Adobe. Former Philadelphia Chief Data Officer Mark Headd famously said, "When you put a PDF in your [GitHub] repo, an angel cries."

Put a license on it

Although government content is not subject to domestic copyright protection, it is important to put an open license on government data so that there is no confusion about how or whether it can be repurposed and distributed.

In general, there are two options that meet the open definitions for licensing public data: public domain and share-alike.

Much like the original eight open-government principles established in 2007, Open Knowledge outlines 11 conditions that must be met for data to qualify as open. (The White House has seven similar principles.) Those conditions relate to access, redistribution, reuse, attribution, integrity and distribution of license. They also specify that there should be no discrimination against people, groups or fields of endeavor; there should be no technological restrictions; and a license must not be specific to a package or restrict the distribution of other works.

"In most jurisdictions, there are intellectual property rights in data that prevent third parties from using, reusing and redistributing data without explicit permission," Open Knowledge states in its "Open Data Handbook." "Even in places where the existence of rights is uncertain, it is important to apply a license simply for the sake of clarity. Thus, if you are planning to make your data available, you should put a license on it -- and if you want your data to be open, this is even more important."

The chief data officer's role

As open data's importance grows, an official government function has evolved in the form of the chief data officer.

Cities, states, federal agencies and even countries are beginning to establish official roles to oversee open-data implementation. Municipalities (San Francisco, Chicago, Philadelphia), federal agencies (Federal Communications Commission, Federal Reserve Board), states (Colorado, New York) and countries (France) have, had or plan to have an executive-level chief data officer.

According to Project Open Data's sample position description, the chief data officer is "part data strategist and adviser, part steward for improving data quality, part evangelist for data sharing, part technologist, and part developer of new data products."

As with the newly popular chief innovation officer, the verdict is still out on whether that role and title will be widely adopted or will simply fall under the auspices of the agency CIO or CTO.

Building an open-data strategy

As governments begin to execute sustainable, successful open-data plans, Data.gov's Holm recommends that agencies create teams of key stakeholders composed not just of policy-makers but also internal data owners, including contractors and partners. The teams should think strategically about their mission and what they want to accomplish, and brainstorm how others might use the data.

For example, the Interior Department announced the creation of a Data Services Board responsible for the agency's data practices, saying in a September 2013 memo that "it is crucial that this activity include mission and program individuals as well as IT specialists so that the entire data life cycle can be addressed and managed appropriately."

Holm recommends that such teams meet every two to four weeks to accomplish their work and determine an appropriate frequency for getting together to maintain momentum.

As more and more innovative government executives begin to strategize about the treasure trove of public information that could be set free and transform the way we live, the ecosystem's potential is unlimited.