Data center innovation: New ways to save energy

Revised thermal standards and an array of cooling techniques promise to reverse the data center power drain.

When one reads "data center" and "energy efficiency" in the same sentence, it is often in reference to companies such as Facebook or Google and their investments in green computing.

The typical data center, however, has yet to absorb the conservation practices of the top-tier energy savers. The ongoing task of powering up and cooling down a data center continues to consume plenty of kilowatt-hours, so there's ample room for improvement in most computer rooms.

"A lot of the federal facilities and the private industry facilities have been slow to adopt the changes that some of the big players have done," said William Tschudi, leader of the High Tech and Industrial Systems Group at Lawrence Berkeley National Laboratory.

Tschudi's group was recently designated the Energy Department's Center of Expertise for energy efficiency in data centers. Part of the center's mission is to provide tools, best practices and technologies to help federal agencies improve their energy efficiency.

Although data center leaders have heeded the efficiency message, other facilities have yet to adopt their techniques on a widespread basis. "A lot of that hasn't trickled down to the main market," Tschudi said. "We are trying to get the whole market to move."

Data centers can avail themselves of an array of energy-saving practices. Industry-accepted environmental guidelines now tolerate higher temperature and humidity levels in data centers, which opens the way for more cooling options than were previously viable, including "free" methods such as evaporative cooling and emerging techniques such as immersion cooling.

Other methods look for energy savings in nontraditional areas such as server-to-server communications. Such innovations, combined with more prosaic approaches to trimming energy use, add up to potentially massive savings.

Why it matters

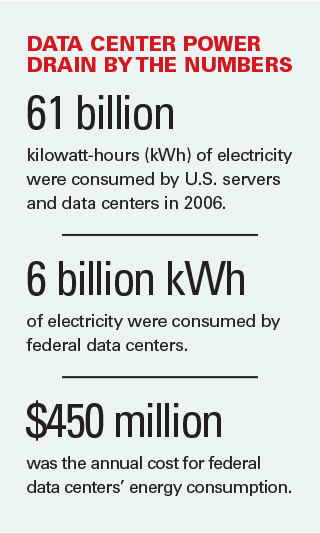

Concerns about the data center power drain are not new. A 2007 Environmental Protection Agency report states that U.S. servers and data centers consumed some 61 billion kilowatt-hours (kWh) of electricity in 2006 -- or 1.5 percent of the nation's total electricity consumption. Federal data centers accounted for 10 percent, or 6 billion kWh of electricity, at an annual cost of $450 million, according to the report.
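The report's figures can be cross-checked with simple arithmetic. The short Python sketch below uses only the numbers quoted above. The average price per kWh it prints is an implied figure derived here, not one stated in the EPA report.

```python
# Figures as quoted from the 2007 EPA report (2006 data).
TOTAL_KWH = 61e9          # all U.S. servers and data centers
FEDERAL_KWH = 6e9         # federal data centers
FEDERAL_COST_USD = 450e6  # annual federal electricity cost

# Share of total data center electricity used by federal facilities.
federal_share = FEDERAL_KWH / TOTAL_KWH
# Implied average price: derived here, not stated in the report.
implied_price = FEDERAL_COST_USD / FEDERAL_KWH

print(f"Federal share of data center use: {federal_share:.1%}")
print(f"Implied average price: ${implied_price:.3f} per kWh")
```

It prints a federal share of about 9.8 percent, consistent with the report's "10 percent," and an implied average of $0.075 per kWh.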

Last year, the Digital Power Group reported that the world's information and communications technology (ICT) sector consumes about 1,500 terawatt-hours of electricity per year, an amount comparable to the combined electricity generation of Japan and Germany. The report contends that the ICT ecosystem's consumption approaches 10 percent of worldwide electricity generation.

Pulkit Grover, an assistant professor of electrical and computer engineering at Carnegie Mellon University, said the power drain will continue to increase. He cited studies by Belgium's Ghent University that predict ICT will consume 15 percent of the world's energy by 2020.

Increasingly power-dense data center racks contribute to the situation. High-density computing generates more heat and, naturally, requires more cooling.

Tony Evans, director of the government sales team in Schneider Electric's IT division, said the ongoing Federal Data Center Consolidation Initiative is creating data centers that pack more computing punch in a smaller footprint but require more electricity than lower-density environments.

Federal legislation is beginning to target this area. In March, the House passed the Energy Efficiency Improvement Act (H.R. 2126), which, among other things, calls for federal agencies to increase the energy efficiency of the data centers they operate.

The Federal IT Acquisition Reform Act, meanwhile, tasks federal CIOs with creating data center optimization plans that take into account energy use. The House passed that bill in February.

The fundamentals

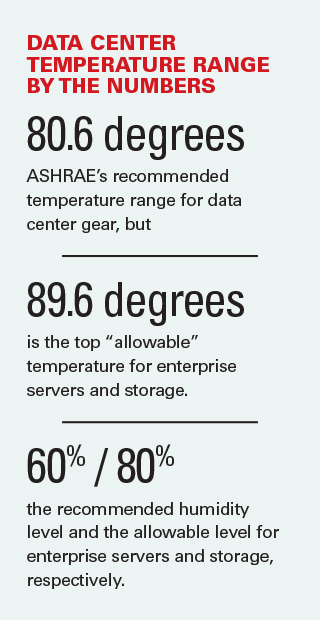

ASHRAE, a global organization credited with establishing de facto thermal standards for data centers, has raised the climate threshold for such facilities. Although temperatures around 68 degrees Fahrenheit were once the norm, ASHRAE's recommended temperature range for data center gear now goes to 80.6 degrees, and the top "allowable" temperature for enterprise servers and storage is 89.6 degrees. The recommended humidity range goes as high as 60 percent, and the allowable range goes as high as 80 percent for enterprise servers and storage.
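Operators who log inlet temperature and humidity could screen readings against these limits. The sketch below is a minimal, hypothetical helper that encodes only the upper limits quoted above (80.6 F and 60 percent humidity recommended, 89.6 F and 80 percent allowable, or roughly 27 C and 32 C). It ignores ASHRAE's lower limits, dew-point criteria and other equipment classes, so it is an illustration rather than a compliance check.

```python
# Upper limits as quoted above for enterprise servers and storage.
# Lower limits and dew-point criteria are deliberately omitted from this sketch.
RECOMMENDED_MAX_F = 80.6
ALLOWABLE_MAX_F = 89.6
RECOMMENDED_MAX_RH = 60.0  # percent relative humidity
ALLOWABLE_MAX_RH = 80.0


def f_to_c(deg_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0


def classify_inlet(temp_f: float, rh_percent: float) -> str:
    """Classify an inlet reading against the upper thresholds only."""
    if temp_f <= RECOMMENDED_MAX_F and rh_percent <= RECOMMENDED_MAX_RH:
        return "recommended"
    if temp_f <= ALLOWABLE_MAX_F and rh_percent <= ALLOWABLE_MAX_RH:
        return "allowable"
    return "out of range"


# Hypothetical readings: (temperature in F, relative humidity in percent).
for temp, rh in [(68.0, 45.0), (78.0, 55.0), (85.0, 70.0), (92.0, 50.0)]:
    print(f"{temp}F ({f_to_c(temp):.1f}C), {rh}% RH -> {classify_inlet(temp, rh)}")
```

A reading only counts as "recommended" when both temperature and humidity fall within the recommended limits; exceeding either one pushes it into the allowable band or out of range.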

"If you walk into a data center and it's cold, there is typically an efficiency opportunity there," Tschudi said. "You don't need to have it be a meat locker."

He added that the shift in guidance has opened up new opportunities for cooling methods. Progressive organizations are already taking advantage of the change, and Tschudi's group is spreading the word to data center operators who are not yet aware of the thermal recommendations.

Liquid cooling methods are among the approaches getting more exposure in the new environment. For years, traditional air conditioning systems have worked in conjunction with water-based chillers to beat the heat in computer rooms. But recent developments give water a more direct role in replacing or supplementing air conditioning.

Tschudi said his group has worked with the Maui High Performance Computing Center, an Air Force Research Laboratory center managed by the University of Hawaii where water-cooled systems have eliminated the need for compressor-based cooling. The Maui approach is referred to as direct water cooling.

Evaporative cooling, sometimes called free cooling, relies on external cooling towers in which evaporation into the outdoor air cools water that then circulates back into the data center to remove heat from computing gear.

Although water effectively removes heat and conserves energy, it can be a scarce resource. Some cooling systems use wastewater instead of the potable variety. According to the Baltimore Sun, the National Security Agency plans to use treated wastewater supplied by Howard County, Md., to cool a data center scheduled to open in 2016.

"This project will deliver a long-term source of high-quality makeup water for the agency's cooling towers in lieu of using Fort Meade domestic 'drinking' water," an NSA spokesman said. Makeup water is used to replace water lost during evaporation.

"Reclaimed water, which is highly treated effluent, will be pumped to the agency cooling towers to replace the condenser water that is lost through evaporation, which occurs because they are located outside and are open to the atmosphere," the spokesman said. "The cooling tower is an integral part of the chiller plant system, which produces the chilled water for cooling the data centers and buildings."

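The scale of that evaporation loss can be roughed out from basic physics. The sketch below assumes, as a simplification, that all heat rejected by the tower leaves as latent heat of evaporation, and it uses an approximate latent heat of 2,400 kJ/kg for water at typical tower temperatures. Real towers also shed some heat without evaporation and lose water in other ways, so the result is only an order-of-magnitude estimate. The 1 MW load is a hypothetical example, not an NSA figure.

```python
LATENT_HEAT_KJ_PER_KG = 2400.0   # assumed approximate latent heat of water near 30 C
WATER_DENSITY_KG_PER_M3 = 1000.0


def evaporation_m3_per_hour(heat_rejected_kw: float) -> float:
    """Rough evaporation rate if all rejected heat goes into evaporating water."""
    kg_per_second = heat_rejected_kw / LATENT_HEAT_KJ_PER_KG  # 1 kW = 1 kJ/s
    return kg_per_second * 3600.0 / WATER_DENSITY_KG_PER_M3


# Hypothetical 1 MW heat load rejected through the cooling tower.
load_kw = 1000.0
print(f"{load_kw:.0f} kW -> about {evaporation_m3_per_hour(load_kw):.1f} m^3 of water per hour")
```

For the hypothetical 1 MW load it prints about 1.5 cubic meters per hour, or roughly 36 cubic meters a day that a makeup water supply would need to replace.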
Nevertheless, Tschudi said water-based cooling is not a widespread practice in the federal sector, and the Center of Expertise's ultimate goal is to accomplish cooling without the use of water.

"In a lot of places in the country, water is a problem," he said.

With that challenge in mind, pilot projects and a few production environments use immersion cooling, in which computing hardware is submerged in tanks filled with nonconductive fluid. Hong Kong-based Allied Control uses 3M's Novec Engineered Fluids to cool a 500 kW system used in bitcoin mining, said Il Ji Kim, marketing manager at 3M Electronics Markets Materials.

The system, which has been in production since October 2013, has allowed Allied Control to cut cooling costs by 95 percent. Work is underway to test the technique's potential in the federal space.

Lawrence Berkeley National Laboratory plans to pilot immersion cooling under the Defense Department's Environmental Security Technology Certification Program in partnership with 3M, Intel, Silicon Graphics International (SGI) and Schneider Electric, among others. The system will be assembled and tested at an SGI facility in Wisconsin and then shipped to the Naval Research Laboratory.

Tschudi said he believes immersion cooling will first attract high-performance computing centers and eventually trickle down to consolidated, high-density data centers.

"The beauty of it is that it doesn't need any special environment," Tschudi said. "It doesn't need to be on a raised floor. So the savings should be pretty great on the infrastructure side."

Kim added that immersion cooling avoids the fan noise of air cooling, noise that can prove problematic in military applications.

Cooling is an important contributor to a data center's energy consumption, but it's not the only one. Carnegie Mellon's Grover said networking usually accounts for 10 percent to 20 percent of a data center's energy consumption. Earlier this year, he was awarded a five-year, $600,000 National Science Foundation grant to conduct research into energy consumption in large data center communications networks.

Grover said a communication link uses the same amount of energy regardless of the distance between servers. He and his team are developing a protocol that could be built into hardware, allowing a device to adjust itself to the communication distance and minimize energy consumption.
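The protocol itself is not described in detail here, so the following is only a toy illustration of the general idea of distance-aware power, not Grover's design: instead of driving every link at one fixed power level, a device picks the lowest-power mode whose reach covers the actual link length. The mode names, reaches, wattages and link lengths are hypothetical placeholders, not figures from Grover's work or any real transceiver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkMode:
    name: str
    max_reach_m: float  # longest link this mode can drive (hypothetical)
    power_w: float      # power drawn in this mode (hypothetical)


# Hypothetical modes, from cheapest to most expensive.
MODES = [
    LinkMode("short", 3.0, 0.5),
    LinkMode("medium", 30.0, 1.5),
    LinkMode("long", 300.0, 3.5),
]


def pick_mode(distance_m: float) -> LinkMode:
    """Return the lowest-power mode whose reach covers the link distance."""
    candidates = [m for m in MODES if m.max_reach_m >= distance_m]
    if not candidates:
        raise ValueError(f"no mode reaches {distance_m} m")
    return min(candidates, key=lambda m: m.power_w)


def fixed_power_total(distances_m: list[float]) -> float:
    """Baseline: every link runs in the longest-reach mode regardless of distance."""
    worst = max(MODES, key=lambda m: m.max_reach_m)
    return worst.power_w * len(distances_m)


def adaptive_power_total(distances_m: list[float]) -> float:
    """Each link runs in the cheapest mode that covers its own distance."""
    return sum(pick_mode(d).power_w for d in distances_m)


links = [1.0, 2.5, 12.0, 25.0, 120.0]  # hypothetical link lengths in meters
print("fixed:", fixed_power_total(links), "W")
print("adaptive:", adaptive_power_total(links), "W")
```

With these made-up numbers, the fixed-power baseline draws 17.5 W across the five links while the distance-aware version draws 7.5 W; the savings come entirely from the short links that no longer run at long-reach power.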

The hurdles

Investments in existing cooling systems and the cost of introducing new techniques limit organizations' ability to acquire systems that could save more energy. But industry executives say agency data centers can pursue a number of no-cost or low-cost efficiency measures while leaving their existing cooling systems in place.

Raising the data center's temperature from the previous norm of 68 or 69 degrees to 72 or 73 degrees can make a big difference, said Rona Newmark, vice president of intelligent energy efficiency strategy at EMC.

"You're saving a tremendous amount of [kilowatt-hours] just by raising the temperature," she said.

Another relatively simple step is tidying the cables under the raised floor to promote better airflow. Schneider Electric's Evans said using blanking panels in a server rack can also have huge benefits at minimal cost. Blanking panels cover unused rack space so hot exhaust air isn't drawn back into the rack.
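As a simple planning aid, the gaps that need blanking panels can be found from a list of which rack units hold equipment. This is a minimal sketch assuming a 42U rack and a hypothetical inventory format of (bottom unit, height in U) for each device, with units numbered from 1 at the bottom.

```python
def empty_ranges(rack_units: int, devices: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Return (first_u, last_u) spans of unused rack units, numbered from 1 at the bottom."""
    occupied = set()
    for bottom_u, height_u in devices:
        occupied.update(range(bottom_u, bottom_u + height_u))

    gaps = []
    start = None
    for u in range(1, rack_units + 1):
        if u not in occupied and start is None:
            start = u                     # a gap begins at this unit
        elif u in occupied and start is not None:
            gaps.append((start, u - 1))   # the gap ended at the previous unit
            start = None
    if start is not None:
        gaps.append((start, rack_units))  # the gap runs to the top of the rack
    return gaps


# Hypothetical inventory: (bottom unit, height in U)
inventory = [(1, 2), (3, 1), (10, 4), (20, 2)]
for first, last in empty_ranges(42, inventory):
    print(f"U{first}-U{last}: {last - first + 1}U of blanking needed")
```

For the sample inventory it reports three gaps, U4-U9, U14-U19 and U22-U42, each of which would be covered with blanking panels.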

The segregation of hot and cold air is an increasingly common way to improve data center efficiency. Hot aisle/cold aisle containment techniques vent server-generated heat into an aisle that is closed off from the cold aisle. Newmark said the method confines heat to an area where it won't create issues for equipment. In addition, the approach keeps cold air from mixing with hot air, which saves energy because cold air can be circulated with less air pressure, she said.
