Rubio warns on 'deep fakes' in disinformation campaigns

Sen. Marco Rubio (R-Fla.) sounded the alarm over the extent to which the American populace remains vulnerable to tech-powered misinformation campaigns, particularly false and doctored videos.

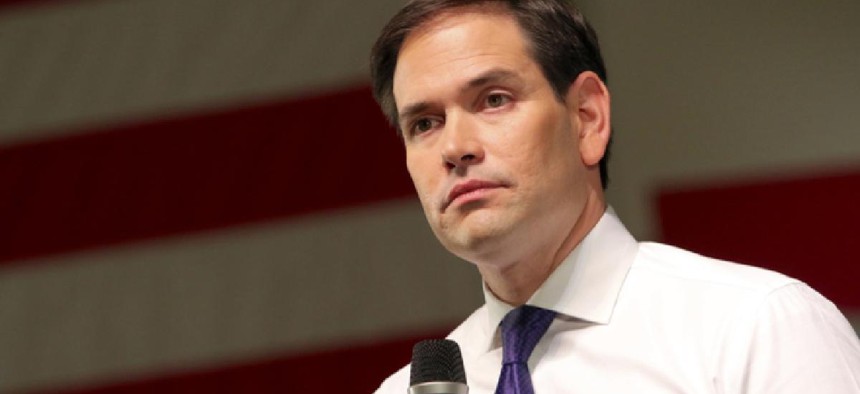

Marco Rubio on the campaign trail in 2016. (Photo credit: Rich Koele/Shutterstock.com)

Speaking at an Atlantic Council event, Sen. Marco Rubio (R-Fla.) said that a cultural shift is needed to protect open democracies and their elections from a variety of disinformation techniques. He then warned about the impact of so-called deep fakes, in which deep learning and artificial intelligence algorithms combine and superimpose different audio and visual sources to create an entirely new (and fake) video that can fool even digital forensic and image analysis experts.

Rubio argued that such videos may only need to appear credible for a short window of time in order to impact an election.

"One thing the Russians have done in other countries in the past is, they've put out incomplete information, altered information and/or fake information, and if it's done strategically, it could impact the outcome of an [election]," Rubio said. "Imagine producing a video that has me or Sen. [Mark] Warner saying something we never said on the eve of an election. By the time I prove that video is fake -- even though it looks real -- it's too late."

Rubio, who has warned about the impact of deep-fake technology in the past, is among a growing group of policymakers and experts fretting over the impact of false or doctored videos on electoral politics. Earlier this year, comedian Jordan Peele and BuzzFeed released a now-viral video that used deep-fake technology to depict former President Barack Obama (voiced by Peele) uttering a number of controversial statements, before warning the viewer about the inherent dangers that such tools pose.

The technology is far from flawless, and in many cases a careful observer can still spot evidence of video inconsistencies or manipulation, but as Chris Meserole and Alina Polyakova noted in a May 2018 article for the Brookings Institution, "bigger data, better algorithms and custom hardware" will soon make such false videos appear frighteningly real.

"Although computers have long allowed for the manipulation of digital content, in the past that manipulation has almost always been detectable: A fake image would fail to account for subtle shifts in lighting, or a doctored speech would fail to adequately capture cadence and tone," Meserole and Polyakova wrote. "However, deep learning and generative adversarial networks have made it possible to doctor images and video so well that it's difficult to distinguish manipulated files from authentic ones."

As the authors and others have pointed out, the algorithmic tools regularly used to detect such fake or altered videos can also be turned around and used to craft even more convincing fakes. Earlier this year, researchers in Germany developed an algorithm to spot face swaps in videos. However, they found that "the same deep-learning technique that can spot face-swap videos can also be used to improve the quality of face swaps in the first place -- and that could make them harder to detect."

Rubio argued that deep fakes can and will become more sophisticated as they move from the realm of amateur entertainment into the hands of governments, which possess far more money and resources to turn them into misinformation tools.

"That's already happening, people are doing it for fun with off-the-shelf technology," said Rubio. "Imagine it in the hands of a nation state."